Module 4 Assignment

A few things you should keep in mind when working on assignments:

Make sure you fill in any place that says YOUR CODE HERE. Do not write your answer anywhere other than where it says YOUR CODE HERE. Anything you write anywhere else will be removed or overwritten by the autograder.

Before you submit your assignment, make sure everything runs as expected. In the menubar, select Kernel, then restart the kernel and run all cells (Restart & Run All).

Do not change the title (i.e. file name) of this notebook.

Make sure that you save your work (in the menubar, select File → Save and Checkpoint).

import numpy as np

from nose.tools import assert_equal, assert_almost_equal, assert_true

import pandas as pd

Problem 1: A function that creates an array

Write a function that takes in a list of numbers and returns a NumPy array.

def array_maker(my_list):
    """
    Input
    ------
    my_list: a list of numbers

    Output
    -------
    my_array: a numpy array created from my_list
    """
    ### YOUR CODE HERE

    return my_array

assert_equal(type(array_maker([1,2,3])), np.ndarray)
assert_equal(array_maker([1,2,3])[0], 1)
assert_equal(array_maker([1,2,3])[1], 2)
assert_equal(array_maker([1,2,3])[2], 3)
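As a rough illustration of the list-to-array conversion that Problem 1 is about, the sketch below uses np.array on a made-up list. The names sample_list and sample_array are hypothetical and are not part of the assignment; this is one common way to do the conversion, not necessarily the intended solution.

```python
import numpy as np

# Hypothetical sample input; any list of numbers behaves the same way.
sample_list = [4, 5, 6]

# np.array copies the list contents into a new ndarray.
sample_array = np.array(sample_list)

print(type(sample_array))
print(sample_array[0])
```

Indexing the resulting array works like indexing the original list, which is what the assert_equal checks above rely on.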

Problem 2: Summarizing random data

Write a function which takes in an integer $n$, generates $n$ uniform[0,1) random numbers, and then outputs their average.

def average_calculator(n):
    """
    Input
    ------
    n: an integer

    Output
    -------
    avg: the average of n uniform[0,1) random numbers
    """
    ### YOUR CODE HERE

    return avg

assert_almost_equal(average_calculator(10000), .5, places=1)
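The idea behind Problem 2 can be sketched with NumPy's random generator, assuming a fixed seed purely so the output is reproducible. The names rng, samples, and sample_mean are hypothetical; the assignment does not prescribe a particular random API.

```python
import numpy as np

# Seeded generator so the sketch is reproducible; the seed value is arbitrary.
rng = np.random.default_rng(0)

# Draw 10,000 samples from uniform[0, 1) and average them.
samples = rng.random(10_000)
sample_mean = samples.mean()

print(sample_mean)
```

With this many samples the mean should land very close to 0.5, which is why the check above only compares to one decimal place (places=1).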

Problem 3: Reading data

Write a function that reads in a comma-delimited data file containing only one row and saves it as an array called "data".

Tip: In this example, the "usecols" argument should not be used.

def array_reader(file_name):
    """
    Input
    ------
    file_name: a string, the name of the file to be read

    Output
    -------
    data: a numpy array
    """
    ### YOUR CODE HERE

    return data

assert_equal(type(array_reader('data_file.txt')), np.ndarray)
assert_equal(array_reader('data_file.txt')[9], 10)
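One way to read a single comma-delimited row into an array is np.loadtxt with delimiter=','. The sketch below uses an in-memory io.StringIO object as a hypothetical stand-in for a file on disk, so the contents shown are made up and are not those of data_file.txt.

```python
import io
import numpy as np

# Hypothetical stand-in for a one-row, comma-delimited file on disk.
fake_file = io.StringIO("1,2,3,4,5,6,7,8,9,10")

# delimiter=',' splits on commas; a single row comes back as a 1-D array.
row = np.loadtxt(fake_file, delimiter=',')

print(row.shape)
print(row[9])
```

Passing a file name string instead of the StringIO object works the same way. By default np.loadtxt returns floating-point values, which still compare equal to the corresponding integers.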

For the next few cells we will use the iris dataset, focusing only on the setosa species.

iris_data = pd.read_csv('iris.csv')

iris_data.head()

setosa_data = iris_data.loc[iris_data['class'] == 'Iris-setosa']

Problem 4: Finding the median

Write a function that takes in a column name from the setosa_data dataset and outputs the median value of that column.

def median_finder(column_name):
    """
    Input
    ------
    column_name: a string, the name of the column

    Output
    -------
    median: the median value of the column
    """
    ### YOUR CODE HERE

    return median

assert_equal(median_finder('sepal length (in cm)'), 5.0)
assert_equal(median_finder('sepal width (in cm)'), 3.4)
assert_equal(median_finder('petal length (in cm)'), 1.5)
assert_equal(median_finder('petal width (in cm)'), 0.2)
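To illustrate how a column median can be taken after filtering rows, here is a minimal sketch on a made-up table. The DataFrame toy and its values are hypothetical; only the column names mirror the ones used in the checks above.

```python
import pandas as pd

# Hypothetical miniature table; the real iris.csv has more rows and columns.
toy = pd.DataFrame({
    'class': ['Iris-setosa', 'Iris-setosa', 'Iris-setosa', 'Iris-virginica'],
    'sepal length (in cm)': [4.9, 5.0, 5.4, 6.3],
})

# Boolean mask keeps only the setosa rows, the same kind of filter
# that builds setosa_data.
toy_setosa = toy.loc[toy['class'] == 'Iris-setosa']

# Series.median returns the middle value of the selected column.
toy_median = toy_setosa['sepal length (in cm)'].median()
print(toy_median)
```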

Problem 5: Comparing medians

Write a function that takes in two column names from the setosa_data dataset, compares their medians, and returns the name of the column with the larger median.

def median_comparer(column_name_one,column_name_two):
    """
    Input
    ------
    column_name_one: a string, the name of the first column
    column_name_two: a string, the name of the second column

    Output
    -------
    greater_median: a string, the name of the column with the greater median
    """
    ### YOUR CODE HERE

    return greater_median

assert_equal(median_comparer('sepal width (in cm)','sepal length (in cm)'), 'sepal length (in cm)')
assert_equal(median_comparer('petal width (in cm)','sepal length (in cm)'), 'sepal length (in cm)')
assert_equal(median_comparer('petal width (in cm)','petal length (in cm)'), 'petal length (in cm)')
assert_equal(median_comparer('petal width (in cm)','sepal width (in cm)'), 'sepal width (in cm)')
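Comparing two column medians and reporting the column name, rather than the value, can be sketched as follows. The frame toy and its columns 'a' and 'b' are hypothetical, and using idxmax is only one possible approach; a plain if/else comparison would work equally well.

```python
import pandas as pd

# Hypothetical two-column frame with made-up values.
toy = pd.DataFrame({
    'a': [1.0, 2.0, 3.0],
    'b': [10.0, 20.0, 30.0],
})

# DataFrame.median gives one median per column, indexed by column name.
medians = toy[['a', 'b']].median()

# idxmax returns the index label (here, the column name) of the largest value.
larger = medians.idxmax()
print(larger)
```

If the two medians are equal, idxmax returns the first of the two names. The problem statement does not say what should happen in a tie, so that behavior is an assumption of this sketch.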

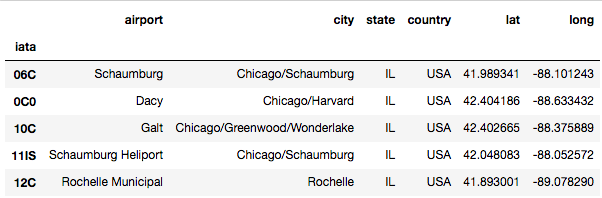

df = pd.read_csv('./airports.csv', index_col='iata')

df.head()

Problem 6: Finding all airports in a state

For this problem you will find all airports in a given state from the airports dataset.

Finish writing the find_state function in the cell below. The state parameter indicates the state whose airports should be returned. The dataframe is passed in as df.

def find_state(df, state='IL'):
    '''
    df - airports.csv loaded into a dataframe
    state - state to find all data for

    returns dataframe containing data from a particular state
    '''
    ### YOUR CODE HERE

    return df_state

from helper import fs, srt

assert_true(fs(df, state='IL').equals(find_state(df, state='IL')))

# Checking a few more states
assert_true(fs(df, state='IL').equals(find_state(df, state='IL')))
assert_true(fs(df, state='NC').equals(find_state(df, state='NC')))
assert_true(fs(df, state='CA').equals(find_state(df, state='CA')))
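Selecting the rows for one state is typically done with a boolean mask. The sketch below assumes the state abbreviation lives in a column named 'state'; that column name, the frame toy_airports, and all of its values are hypothetical, so check df.head() for the actual column names in airports.csv.

```python
import pandas as pd

# Hypothetical rows; the 'state' column name is an assumption about airports.csv.
toy_airports = pd.DataFrame(
    {
        'state': ['IL', 'NC', 'IL'],
        'lat': [41.0, 35.0, 40.0],
    },
    index=pd.Index(['AAA', 'BBB', 'CCC'], name='iata'),
)

# The comparison yields a True/False Series; indexing with it keeps the True rows.
toy_il = toy_airports[toy_airports['state'] == 'IL']
print(list(toy_il.index))
```

The filtered result keeps the original index and row order.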

Problem 7: Sorting dataframes

Finish writing the sort_lat_asc function. It should sort dataframes passed into it by latitude in ascending order.

def sort_lat_asc(df):
    '''
    df - airports.csv loaded into a dataframe

    returns dataframe sorted by latitude in ascending order
    '''
    ### YOUR CODE HERE

    return sorted_df

nc_df = find_state(df, state='NC')
assert_true(srt(nc_df).equals(sort_lat_asc(nc_df)))

md_df = find_state(df, state='MD')
assert_true(srt(md_df).equals(sort_lat_asc(md_df)))
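Sorting a dataframe by one column can be sketched with DataFrame.sort_values. The column name 'lat' is an assumption about how latitude is labeled in airports.csv, and toy_airports with its values is hypothetical.

```python
import pandas as pd

# Hypothetical rows; the 'lat' column name is an assumption about airports.csv.
toy_airports = pd.DataFrame(
    {
        'state': ['IL', 'NC', 'IL'],
        'lat': [41.0, 35.0, 40.0],
    },
    index=pd.Index(['AAA', 'BBB', 'CCC'], name='iata'),
)

# sort_values returns a new, sorted dataframe and leaves the original unchanged.
toy_sorted = toy_airports.sort_values(by='lat', ascending=True)
print(list(toy_sorted.index))
```

Because the result is a new object, it has to be assigned to a name (or returned) for the sorted order to be used later.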

© 2017: Robert J. Brunner at the University of Illinois.

This notebook is released under the Creative Commons license CC BY-NC-SA 4.0. Any reproduction, adaptation, distribution, dissemination or making available of this notebook for commercial use is not allowed unless authorized in writing by the copyright holder.